Table of Contents

Why LLM-Independent Memory Layers Are the Future of AI Architecture

In the fast-moving world of Large Language Models, the technology landscape is constantly shifting. New models with improved context windows, enhanced tool-calling capabilities, and optimized inference performance appear regularly. For enterprises, this raises a critical question: How do we build AI systems that don't require complete rebuilds with every model change?

The answer lies in a clear architectural decision: LLM-independent memory layers. While language models are interchangeable and primarily serve as reasoning engines, the durable enterprise value resides in data, tools, and retrieval mechanisms. This insight is relevant not just for large corporations, but for virtually every company seriously working with AI.

At Orbitype, we treat LLMs, databases, storage, and compute as equal building blocks in an open ecosystem. All components are connected via standardized APIs, without proprietary formats that would lead to vendor lock-in. This architectural decision enables us to respond flexibly to new developments while protecting investments in data and tooling.

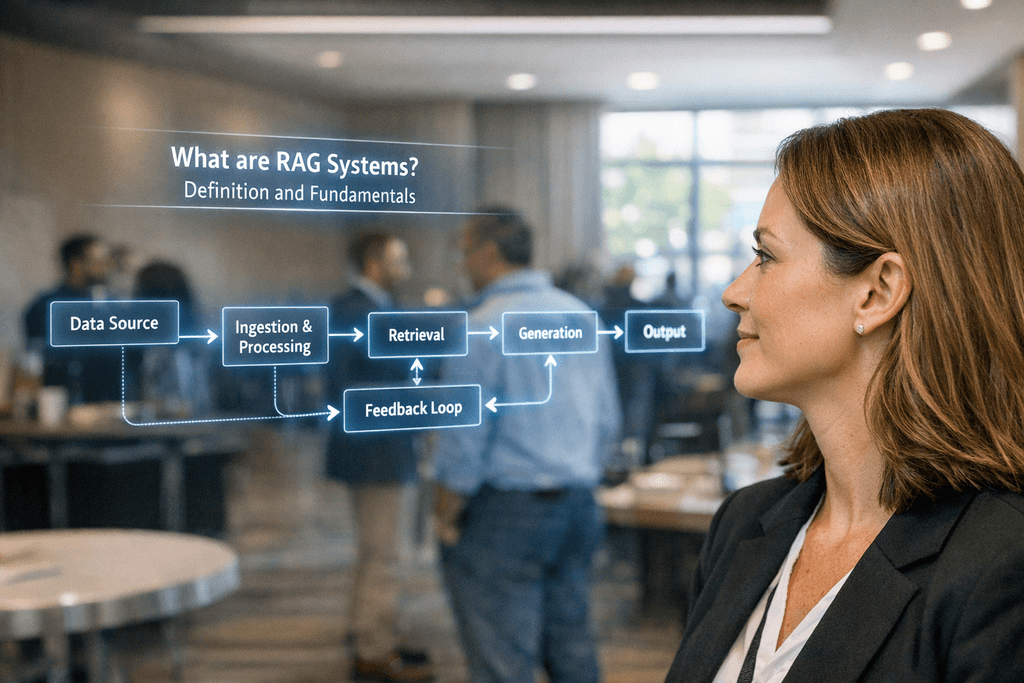

RAG as a Tool in Deterministic Workflows: Precision Over Context Overload

Retrieval-Augmented Generation (RAG) has established itself as a key component of modern AI systems. However, implementation determines success or failure. Many companies make the mistake of treating RAG as a standalone solution rather than integrating it as a precise tool within larger, deterministic workflows.

In practice, this means: RAG systems should not operate in isolation but as tools within structured processes. We employ ReAct-style agents capable of deciding when and how to deploy RAG retrieval. This approach leads to token-efficient, highly precise retrieval following the principle of "less context, more impact."

The technical implementation rests on several pillars:

- Structured Workflows: Deterministic processes define clear decision points where RAG retrieval is triggered

- Token Efficiency: Instead of loading massive amounts of context into prompts, only the most relevant information is retrieved

- Granular Permissions: Control at the source, tag, and memory level determines what information an agent may and must see

- Precise Retrieval: Hybrid search combines semantic vector search with metadata filtering for optimal results

This architecture enables RAG systems to be deployed scalably and cost-effectively without compromising result quality.

Postgres as Stable Foundation: pgvector and the Future of Graph-RAG

Choosing database technology is one of the most critical architectural decisions when building AI systems. While many vendors rely on specialized vector databases, we have deliberately chosen PostgreSQL as our foundation. This decision is based on several strategic considerations.

PostgreSQL offers unmatched stability and a mature ecosystem. With decades of development and a global community, Postgres is one of the most reliable databases available. The pgvector extension enables native vector search directly in the database, without additional infrastructure.

The technical advantages of pgvector are substantial:

- Native Integration: Vectors are treated as a native data type, no external synchronization needed

- SQL Compatibility: Complex queries combine relational and vector operations in a single statement

- Performance: Millisecond response times even with millions of vectors through optimized index structures (HNSW, IVFFlat)

- Transaction Safety: ACID guarantees even for vector operations

- Cost Efficiency: No additional licensing costs or specialized infrastructure

For highly interconnected knowledge structures, we are additionally evaluating Graph-RAG approaches. These extend classic RAG with the ability to model and search complex relationships between entities. Particularly for enterprise knowledge bases with many cross-references and dependencies, Graph-RAG offers significant advantages over purely vector-based search.

Fine-Tuning vs. RAG: When Custom Models Make Sense

The question "Fine-tuning or RAG?" is intensely debated in the AI community. Our position is based on practical experience: Fine-tuning should primarily be used for tone and behavior, not as a knowledge store.

The reasons are pragmatic:

- Rapid Aging: Knowledge baked into models becomes outdated quickly and is difficult to update

- Difficult Control: It's unclear what knowledge the model has actually learned and how reliably it can be retrieved

- High Costs: Every model change requires re-fine-tuning with considerable effort

- Lack of Transparency: No traceability of where information comes from (no source attribution)

RAG systems solve these problems elegantly: Knowledge remains in structured databases, is always updatable, and every answer can be traced back to its sources.

When does custom training with domain-specific fine-tuning make sense? Only when the use case is so specialized and differentiated that large providers cannot realistically optimize for it. Once you've collected enough high-quality domain data, custom models can outperform general-purpose LLMs under specific environmental or operational constraints.

Another advantage: You're less exposed to the "latest model race" since you can iterate on your own schedule. Before reaching this data threshold, however, strong general models plus good prompting, tooling, and retrieval typically deliver better results at far lower cost and complexity.

The good news: Everything you build in tooling and memory layers ports cleanly to custom models later. So there's no wasted work.

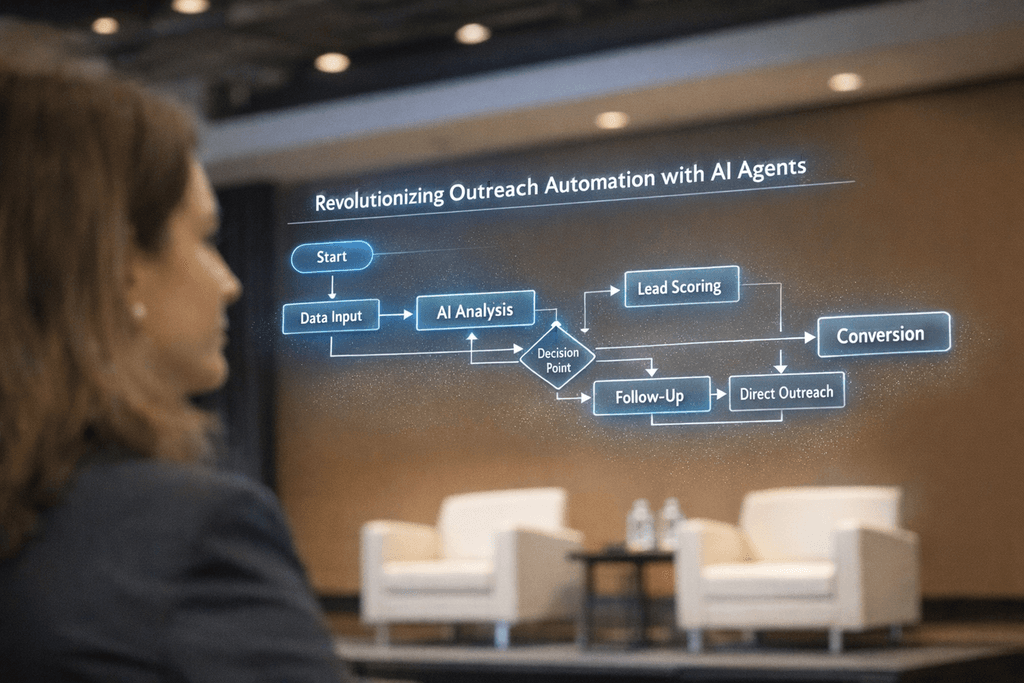

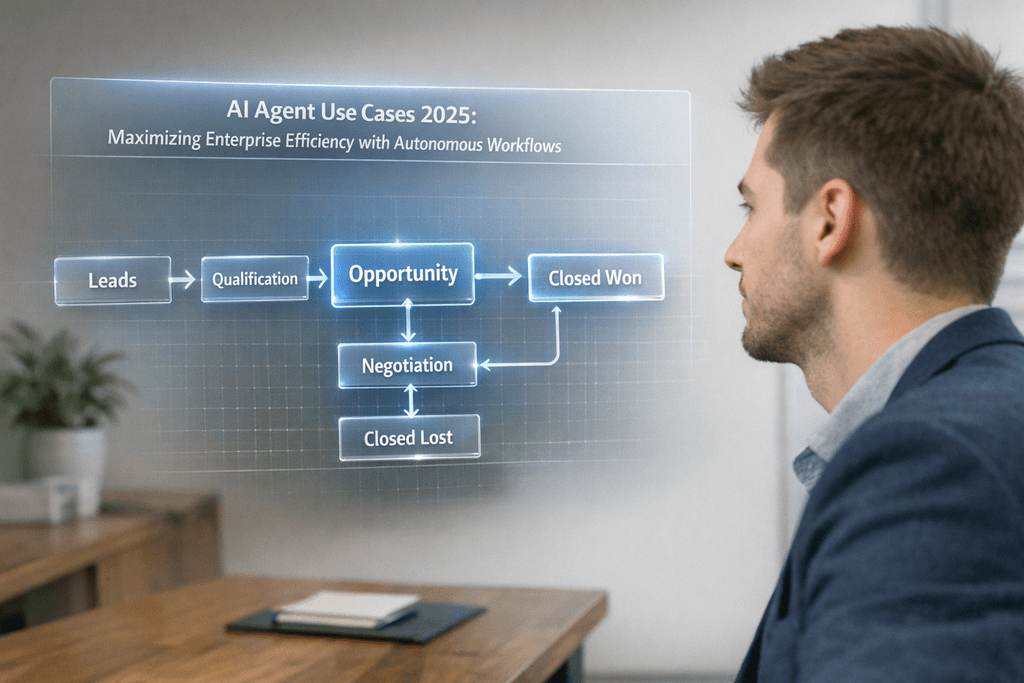

Multi-Agent Architectures: Central Vector Database with Permissions

In many production AI setups, it's not a single agent running, but an ecosystem of specialized agents accessing a shared knowledge base. This architecture offers significant advantages over isolated systems.

A central vector database with granular permissions serves as a shared knowledge layer, connected to multiple agents with different roles. Important: Often workflows run completely without agents when deterministic processes suffice.

The agent typology in such systems:

- Execution-focused Agents: Query, Decide, Act - these agents perform concrete tasks (e.g., email response, data extraction, API calls)

- RAG-Improvement Agents: Research, Condensation, Structuring, Quality Checks, Deduplication - these agents continuously improve the knowledge base itself

The permission system is central: Each agent sees only the information it may and must see. This is enforced at multiple levels:

- Source Level: Access to specific data sources (e.g., HR documents only for HR agents)

- Tag Level: Filtering by metadata and categories

- Memory Level: Access to specific conversation or session memories

This architecture enables complex AI agent workflows to be operated securely and scalably, without sensitive information falling into the wrong hands.

The elegant part: Everything you build in the tooling and memory layers ports cleanly to custom models later. So there's no wasted work if you later want to switch to your own models.

Practical Implementation: From Theory to Production Solution

Implementing an LLM-independent memory architecture may sound complex, but with the right tools and methods, it's quite achievable. Here we show a battle-tested approach.

Phase 1: Database Setup with pgvector

The first step is setting up a PostgreSQL database with the pgvector extension. In Orbitype, this happens with one click; alternatively, an existing Postgres instance can be extended:

- Installation of the pgvector extension

- Creation of tables for documents, embeddings, and metadata

- Setup of HNSW or IVFFlat indexes for performant vector search

- Definition of permission structures at table and row level

Phase 2: Embedding Pipeline

Documents and knowledge sources must be converted into vectors. Best practices:

- Chunking strategy: Split documents into meaningful sections (300-500 tokens)

- Overlap: 10-20% overlap between chunks for context preservation

- Metadata: Store source, timestamp, tags, permissions with each chunk

- Embedding model: text-embedding-3-large or domain-specific alternatives

Phase 3: Retrieval Layer

The retrieval layer combines various search techniques:

- Hybrid search: Vector search + keyword matching + metadata filtering

- Reranking: Two-stage retrieval with reranking model for higher precision

- Permission filtering: Automatic filtering based on agent role

Phase 4: Agent Integration

Agents are implemented as ReAct-style loops that can use RAG as a tool. In modern AI agent frameworks, this is done via tool-calling interfaces that allow the LLM to decide when retrieval is needed.

This architecture is production-ready, scalable, and - most importantly - independent of the LLM used. Switching from GPT-4 to Claude or an open-source model requires only minimal adjustments.

Performance Optimization and Scaling of RAG Systems

A production RAG system must not only be functionally correct but also remain performant under load. Optimization occurs at multiple levels.

Index Optimization

Choosing the right vector index is crucial for performance:

- HNSW (Hierarchical Navigable Small World): Best choice for most use cases, excellent balance between speed and precision

- IVFFlat: Suitable for very large datasets, slightly lower precision but significantly faster with millions of vectors

- Parameter Tuning: ef_construction, ef_search, and m parameters influence the trade-off between speed and quality

Caching Strategies

Intelligent caching significantly reduces latency:

- Embedding cache: Frequently searched queries are pre-computed

- Result cache: Identical search queries return cached results

- Semantic cache: Similar queries use similar results

Batch Processing

For large data volumes, batch processing is essential:

- Parallel embedding generation with worker pools

- Bulk insert operations for new documents

- Asynchronous index updates without downtime

Monitoring and Observability

Production systems require comprehensive monitoring:

- Latency metrics for retrieval operations

- Quality metrics: Precision@k, Recall@k, MRR

- Resource monitoring: CPU, memory, disk I/O

- Business metrics: Success rate of agent tasks, user satisfaction

With these optimizations, RAG systems can scale to millions of documents and thousands of concurrent requests without compromising response quality.

Security and Compliance in Multi-Tenant RAG Systems

Security is not optional in enterprise AI systems. Especially in multi-tenant architectures where multiple customers or departments share the same infrastructure, robust security mechanisms are essential.

Row-Level Security (RLS) in PostgreSQL

PostgreSQL offers native Row-Level Security, perfectly suited for RAG systems:

- Policies define which rows a user or agent may see

- Automatic filtering at database level, no application logic needed

- Performance-optimized through index integration

Encryption at Multiple Levels

- At Rest: Database encryption for stored data

- In Transit: TLS/SSL for all API communication

- Application Level: Additional encryption of sensitive fields

Audit Logging and Compliance

For regulated industries, complete traceability is required:

- Logging of all accesses to sensitive data

- Versioning of documents and change history

- Retention policies for automatic deletion after expiration

- GDPR-compliant data processing with right-to-be-forgotten mechanisms

API Security

- Token-based authentication (JWT, OAuth2)

- Rate limiting per user/agent

- Input validation and sanitization

- CORS policies for web access

Prompt Injection Prevention

RAG systems are potentially vulnerable to prompt injection attacks. Protective measures:

- Strict separation of system prompts and user input

- Input filtering and blacklisting of dangerous patterns

- Output validation before returning to user

- Sandbox execution for tool calling

These security measures are natively integrated in Orbitype and enable secure multi-tenant deployments without additional implementation effort.

Conclusion: Investing in Durable Value Instead of Interchangeable Models

The AI landscape is evolving rapidly, but one truth remains constant: The durable value lies not in the model, but in data, tools, and retrieval mechanisms. Companies that adopt LLM-independent architectures today are creating a future-proof foundation for their AI strategy.

The key insights summarized:

LLMs are interchangeable reasoning engines; the value lies in the memory layer and the orchestration of the systems

RAG as a tool in deterministic workflows offers optimal balance between flexibility and control

PostgreSQL with pgvector provides a stable, scalable foundation without vendor lock-in

Fine-tuning only makes sense for highly specialized use cases, not as a knowledge store

Multi-agent architectures with granular permissions enable secure, scalable systems

At Orbitype, we have consistently implemented these principles. Our platform treats all components as equal, open building blocks connected via APIs. This enables maximum flexibility without proprietary formats or lock-in.

The path forward is clear: Invest in your data infrastructure, build robust retrieval mechanisms, and treat LLMs as interchangeable components. Everything you build in tooling and memory layers remains valuable - regardless of which model is state-of-the-art next year.

The AI revolution is not happening in the models, but in how we structure, store, and make knowledge accessible. Companies that understand this will be the winners of the next decade.

Sources and Further Resources

This article is based on practical experience and current research in AI architectures. The following resources offer further information:

Orbitype Resources:

- What are RAG Systems? Definition and Fundamentals

- AI Agent Use Cases 2025: Maximizing Enterprise Efficiency

- AI Agent Revolution: Guide to Development & Best Practices

- Orbitype Platform

Technical Documentation:

- PostgreSQL pgvector Extension: Official documentation for vector operations in PostgreSQL

- LangChain Framework: Tools for LLM-based applications and RAG systems

- ReAct Pattern: Research paper on Reasoning and Acting in Language Models

Best Practices and Standards:

- OWASP AI Security Guidelines: Security guidelines for AI systems

- GDPR Compliance for AI: Data protection compliance for AI applications

- Multi-Tenant Architecture Patterns: Architecture patterns for multi-tenant systems

For questions about implementation or specific use cases, we are happy to help.